Align with Me, Not TO Me: How People Perceive Concept Alignment with LLM-Powered Conversational Agents

Shengchen Zhang, Weiwei Guo, Xiaohua Sun

CHI’25 EA

Abstract

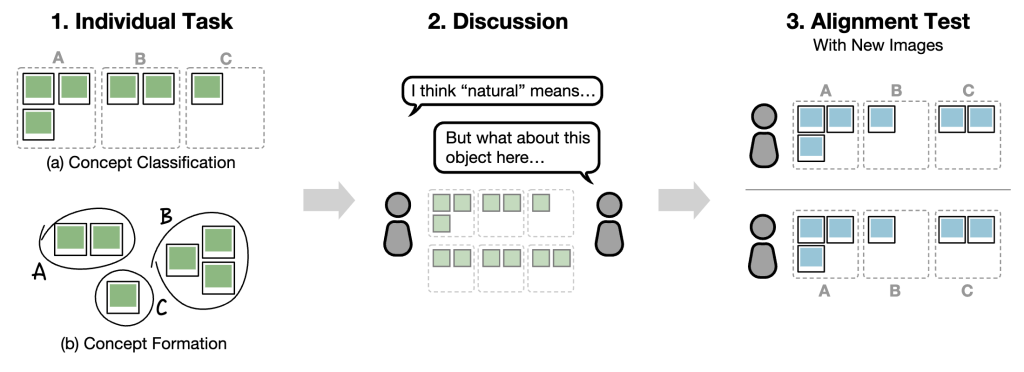

Concept alignment—building a shared understanding of concepts—is essential for human and human-agent communication. While large language models (LLMs) promise human-like dialogue capabilities for conversational agents, the lack of studies to understand people’s perceptions and expectations of concept alignment hinders the design of effective LLM agents. This paper presents results from two lab studies with human-human and human-agent pairs using a concept alignment task. Quantitative and qualitative analysis reveals and contextualizes potentially (un)helpful dialogue behaviors, how people perceived and adapted to the agent, as well as their preconceptions and expectations. Through this work, we demonstrate the co-adaptive and collaborative nature of concept alignment and identify potential design factors and their trade-offs, sketching the design space of concept alignment dialogues. We conclude by calling for designerly endeavors on understanding concept alignment with LLMs in context, as well as technical efforts to combine theory-informed and LLM-driven approaches.

Info

Authors: Shengchen Zhang, Weiwei Guo, Xiaohua Sun

Link: https://dl.acm.org/doi/10.1145/3706599.3720126